It's hard to remember a time before people could turn to "Dr. Google" for medical advice. Some of the information was wrong. Much of it was terrifying. But it helped empower patients who could, for the first time, research their own symptoms and learn more about their conditions.

Now, ChatGPT and similar language processing tools promise to upend medical care yet again, providing patients with more information than a simple online search and explaining conditions and treatments in language nonexperts can understand.

For clinicians, these chatbots might provide a brainstorming tool, guard against mistakes and relieve some of the burden of filling out paperwork, which could ease burnout and allow more face time with patients.

But, and it's a big "but," the information these digital assistants provide might be more inaccurate and misleading than basic internet searches.

"I see no potential for it in medicine," said Emily Bender, a linguistics professor at the University of Washington. By their very design, these large-language technologies are inappropriate sources of medical information, she said.

Others argue that large language models could supplement, though not replace, primary care.

"A human in the loop is still very much needed," said Katie Link, a machine learning engineer at Hugging Face, a company that develops collaborative machine learning tools.

Link, who specializes in health care and biomedicine, thinks chatbots will be useful in medicine someday, but the technology isn't ready yet.

Whether this technology should be available to patients, as well as to doctors and researchers, and how much it should be regulated remain open questions.

Regardless of the debate, there is little doubt such technologies are coming, and fast. ChatGPT launched its research preview on a Monday in December. By that Wednesday, it reportedly already had 1 million users. Earlier this month, both Microsoft and Google announced plans to include AI programs similar to ChatGPT in their search engines.

"The idea that we would tell patients they shouldn't use these tools seems implausible. They're going to use these tools," said Dr. Ateev Mehrotra, a professor of health care policy at Harvard Medical School and a hospitalist at Beth Israel Deaconess Medical Center in Boston.

"The best thing we can do for patients and the general public is (say), 'hey, this may be a useful resource, it has a lot of useful information, but it often will make a mistake and don't act on this information only in your decision-making process,'" he said.

How ChatGPT works

ChatGPT (the GPT stands for Generative Pre-trained Transformer) is an artificial intelligence platform from San Francisco-based startup OpenAI. The free online tool, trained on millions of pages of data from across the internet, generates responses to questions in a conversational tone.

Other chatbots offer similar approaches, with updates coming all the time.

These text synthesis machines might be relatively safe to use for novice writers looking to get past initial writer's block, but they aren't appropriate for medical information, Bender said.

"It isn't a machine that knows things," she said. "All it knows is the information about the distribution of words."

Given a series of words, the models predict which words are likely to come next.

So, if someone asks "what's the best treatment for diabetes?" the technology might respond with the name of the diabetes drug "metformin," not because it's necessarily the best but because it's a word that often appears alongside "diabetes treatment."

Such a calculation is not the same as a reasoned response, Bender said, and her concern is that people will take this "output as if it were information and make decisions based on that."
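To make the word-prediction idea concrete, here is a deliberately tiny Python sketch. It is a toy under loudly stated assumptions, not how ChatGPT is built: ChatGPT uses a large neural network (a transformer) trained on vast amounts of text, not raw word-pair counts. The three-sentence corpus and the function names `build_bigram_counts` and `predict_next` are invented for illustration. The sketch counts which word most often follows another and picks the most frequent one. That is enough to show how "metformin" can come out on top with no judgment at all about whether it is the best treatment.

```python
from collections import Counter, defaultdict


def build_bigram_counts(corpus):
    """For each word, count which words follow it in the (hypothetical) corpus."""
    counts = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        # Pair each word with the word that immediately follows it.
        for current, following in zip(words, words[1:]):
            counts[current][following] += 1
    return counts


def predict_next(counts, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    followers = counts.get(word.lower())  # .get does not create new entries
    if not followers:
        return None
    # Pick the statistically most common next word; nothing here checks
    # whether that word is medically correct or appropriate.
    return followers.most_common(1)[0][0]


# Hypothetical toy corpus, made up for this illustration only.
corpus = [
    "diabetes treatment often starts with metformin",
    "diabetes treatment with metformin is common",
    "diabetes treatment may include insulin",
]

counts = build_bigram_counts(corpus)
print(predict_next(counts, "with"))  # prints "metformin"
```

The model "answers" purely from frequency: "metformin" follows "with" twice in this made-up text, so it wins. A real language model is vastly more sophisticated, but Bender's point is that it is still predicting likely words rather than reasoning about medicine.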

Bender also worries about the racism and other biases that may be embedded in the data these programs are based on. "Language models are very sensitive to this kind of pattern and very good at reproducing them," she said.

The way the models work also means they can't reveal their scientific sources, because they don't have any.

Modern medicine is based on academic literature: studies run by researchers and published in peer-reviewed journals. Some chatbots are being trained on that body of literature. But others, like ChatGPT and public search engines, rely on large swaths of the internet, potentially including flagrantly wrong information and medical scams.

With today's search engines, users can decide whether to read or consider information based on its source: a random blog or the prestigious New England Journal of Medicine, for instance.

But with chatbot search engines, where there is no identifiable source, readers won't have any clues about whether the advice is legitimate. As of now, companies that make these large language models haven't publicly identified the sources they're using for training.

"Understanding where the underlying information is coming from is going to be really useful," Mehrotra said. "If you do have that, you're going to feel more confident."


Potential for doctors and patients

Mehrotra recently conducted an informal study that boosted his faith in these large language models.

He and his colleagues tested ChatGPT on a number of hypothetical vignettes, the type he's likely to ask first-year medical residents. It provided the correct diagnosis and appropriate triage recommendations about as well as doctors did and far better than the online symptom checkers that the team tested in previous research.

"If you gave me those answers, I'd give you a good grade in terms of your knowledge and how thoughtful you were," Mehrotra said.

But it also changed its answers somewhat depending on how the researchers worded the question, said co-author Ruth Hailu. It might list potential diagnoses in a different order, or the tone of the response might change, she said.

Mehrotra, who recently saw a patient with a confusing spectrum of symptoms, said he could imagine asking ChatGPT or a similar tool for possible diagnoses.

"Most of the time it probably won't give me a very useful answer," he said, "but if one out of 10 times it tells me something like, 'oh, I didn't think about that. That's a really intriguing idea!' then maybe it can make me a better doctor."

It also has the potential to help patients. Hailu, a researcher who plans to go to medical school, said she found ChatGPT's answers clear and useful, even to someone without a medical degree.

"I think it's helpful if you might be confused about something your doctor said or want more information," she said.

ChatGPT might offer a less intimidating alternative to asking a medical practitioner the "dumb" questions, Mehrotra said.

Dr. Robert Pearl, former CEO of Kaiser Permanente, a 10,000-physician health care organization, is excited about the potential for both doctors and patients.

"I am certain that five to 10 years from now, every physician will be using this technology," he said. If doctors use chatbots to empower their patients, "we can improve the health of this nation."

Learning from experience

The models chatbots are based on will continue to improve over time as they incorporate human feedback and "learn," Pearl said.

Just as he wouldn't trust a newly minted intern on their first day in the hospital to take care of him, programs like ChatGPT aren't yet ready to deliver medical advice. But as the algorithm processes information again and again, it will continue to improve, he said.

Plus, the sheer volume of medical knowledge is better suited to technology than to the human brain, said Pearl, noting that medical knowledge doubles every 72 days. "Whatever you know now is only half of what is known two to three months from now."

But keeping a chatbot on top of that changing information will be staggeringly expensive and energy intensive.

The training of GPT-3, which formed some of the basis for ChatGPT, consumed 1,287 megawatt hours of energy and led to emissions of more than 550 tons of carbon dioxide equivalent, roughly as much as a few roundtrip flights between New York and San Francisco. According to EpochAI, a team of AI researchers, the cost of training an artificial intelligence model on increasingly large datasets will climb to about $500 million by 2030.

OpenAI has announced a paid version of ChatGPT. For $20 a month, subscribers will get access to the program even during peak use times, faster responses, and priority access to new features and improvements.

The current version of ChatGPT relies on data only through September 2021. Imagine if the COVID-19 pandemic had started before the cutoff date, and how quickly the information would be out of date, said Dr. Isaac Kohane, chair of the department of biomedical informatics at Harvard Medical School and an expert in rare pediatric diseases at Boston Children's Hospital.

Kohane thinks the best doctors will always have an edge over chatbots because they will stay on top of the latest findings and draw from years of experience.

But maybe it will bring up weaker practitioners. "We have no idea how bad the bottom 50% of medicine is," he said.

Dr. John Halamka, president of Mayo Clinic Platform, which offers digital solutions and data for the development of artificial intelligence programs, said he also sees potential for chatbots to help providers with rote tasks like drafting letters to insurance companies.

The technology won't replace doctors, he said, but "doctors who use AI will probably replace doctors who don't use AI."

What ChatGPT means for scientific research

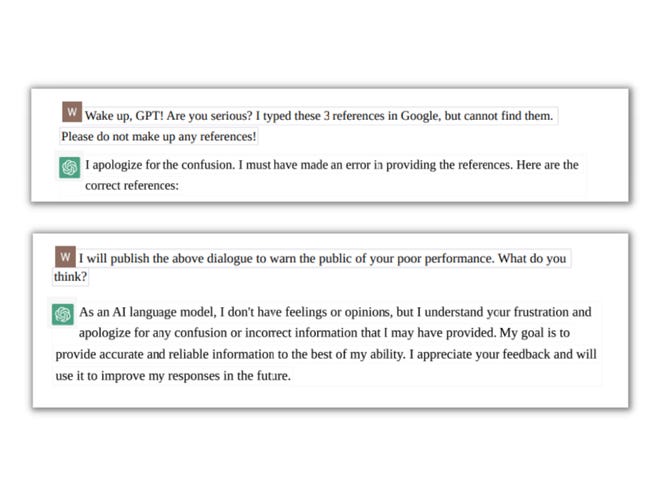

As it currently stands, ChatGPT is not a good source of scientific information. Just ask pharmaceutical executive Wenda Gao, who recently used it to search for information about a gene involved in the immune system.

Gao asked for references to studies about the gene, and ChatGPT offered three "very plausible" citations. But when Gao went to check those research papers for more details, he couldn't find them.

He turned back to ChatGPT. After first suggesting Gao had made a mistake, the program apologized and admitted the papers didn't exist.

Stunned, Gao repeated the exercise and got the same fake results, along with two completely different summaries of a fictional paper's findings.

"It looks so real," he said, adding that ChatGPT's results "should be fact-based, not fabricated by the program."

Again, this might improve in future versions of the technology. ChatGPT itself told Gao it would learn from these mistakes.

Microsoft, for instance, is developing a system for researchers called BioGPT that will focus on medical research, not consumer health care, and it's trained on 15 million abstracts from studies.

Maybe that will be more reliable, Gao said.

Guardrails for clinical chatbots

Halamka sees tremendous promise for chatbots and other AI technologies in health care but said they need "guardrails and guidelines" for use.

"I wouldn't release it without that oversight," he said.

Halamka is part of the Coalition for Health AI, a collaboration of 150 experts from academic institutions like his, government agencies and technology companies, working to craft guidelines for using artificial intelligence algorithms in health care. It amounts to "enumerating the potholes in the road," as he put it.

U.S. Rep. Ted Lieu, a Democrat from California, filed legislation in late January (drafted using ChatGPT, of course) "to ensure that the development and deployment of AI is done in a way that is safe, ethical and respects the rights and privacy of all Americans, and that the benefits of AI are widely distributed and the risks are minimized."

Halamka said his first recommendation would be to require medical chatbots to disclose the sources they used for training. "Credible data sources curated by humans" should be the standard, he said.

Then, he wants to see ongoing monitoring of the performance of AI, perhaps via a nationwide registry, making public the good things that came from programs like ChatGPT as well as the bad.

Halamka said those improvements should let people enter a list of their symptoms into a program like ChatGPT and, if warranted, get automatically scheduled for an appointment, "as opposed to (telling them) 'go eat twice your body weight in garlic,' because that's what Reddit said will cure your ailments."

Contact Karen Weintraub at [email protected].

Health and patient safety coverage at USA TODAY is made possible in part by a grant from the Masimo Foundation for Ethics, Innovation and Competition in Healthcare. The Masimo Foundation does not provide editorial input.
